I’ve seen teams spend heavily on training, then point to completion rates as proof that it worked. If nothing changes on the floor, in the inbox, or on the scoreboard, that spend is just noise. That’s why I treat employee training assessment as a business discipline, not a formality.

Done right, it helps you find gaps before you train, keep learning on track while training is live, and prove what improved after with numbers you can defend. In this guide, I’ll break down when and how to assess, which metrics matter, and the methods that reveal real progress.

You’ll also learn how to turn results into decisions like retraining, coaching, certification, or scaling a program that’s working.

Let’s jump in.

What Is an Employee Training Assessment?

An employee training assessment is a structured way to check whether training is targeting the right gaps and producing the right outcomes. I look at it as a simple question: what should people know or be able to do after training, and how will we verify it?

In practice, it can be a short baseline test before a program starts, quick checks during training to see what’s sticking, and a post-training evaluation to confirm improvement.

Depending on the role, that assessment might measure product knowledge, process accuracy, safety awareness, policy understanding, or role-specific skills like communication and problem solving.

The point isn’t to “test” employees for the sake of it. The point is to create proof you can use to refine the training, support learners who are falling behind, and show leadership what changed because you invested in learning.

When & Why to Assess Employee Training

Training assessments work best when they run like a system: baseline, checkpoints, proof. That structure keeps training from turning into a one-off activity and makes it easier to show what changed because of the time and budget invested.

1. Pre-Training Assessment: Set a Baseline and Target Gaps

Use this to uncover current skill levels and avoid training based on assumptions. A baseline makes it clear who needs training, what they need, and how much improvement is realistic.

Example: Before a customer service training, run a short diagnostic on refund policy, de-escalation steps, and ticket tagging. The results will show whether the gap is policy knowledge, communication, or tool usage.

Action Ideas: Run a job-aligned diagnostic focused on real tasks. Set a baseline score by role or level. Group gaps into topic clusters so training targets the highest-impact weaknesses first.

2. During-Training Assessment: Keep Learning On Track

Use this to catch confusion early, reinforce key concepts, and prevent small gaps from turning into big performance issues later. These checkpoints also reveal whether the training content is clear, relevant, and paced correctly.

Example: In a sales onboarding course, add a 5-question check after the objection-handling module. If most learners miss the same two questions, the script or examples need refinement before moving on.

Action Ideas: Add short checks at the end of each module. Review most-missed questions to spot patterns. Adjust examples, pacing, or reinforcement while the training is still live.

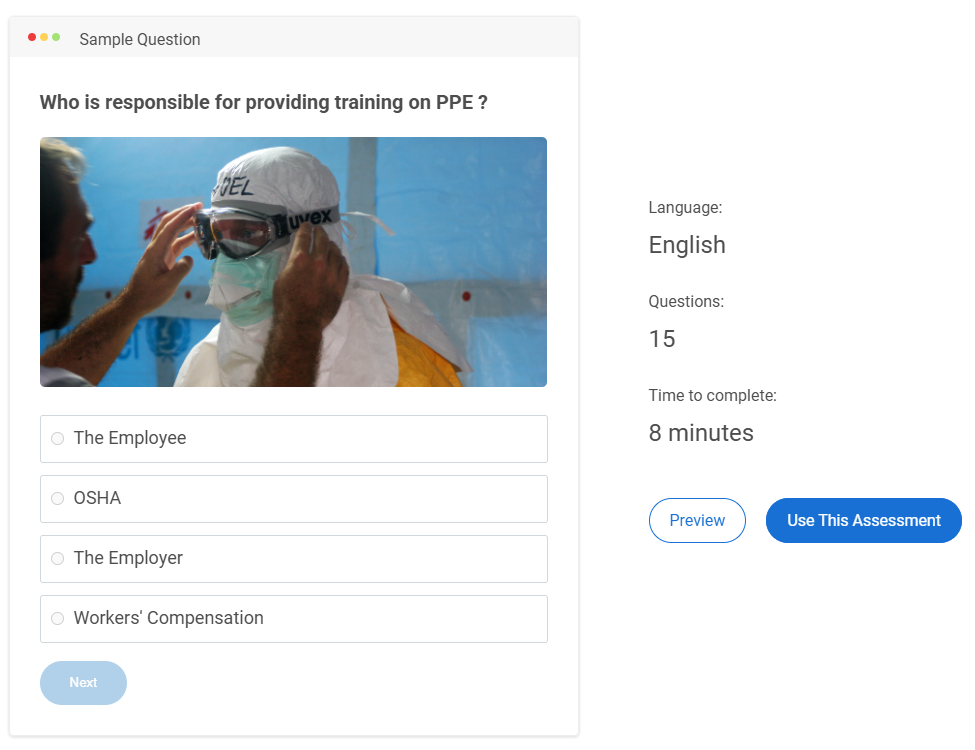

3. Post-Training Assessment: Prove Impact and Readiness

Use this to validate learning gains and confirm employees are ready to apply what they learned in real work situations. It helps separate content that worked from content that needs improvement.

Example: After a safety training, use a scenario-based assessment on PPE selection and incident response. Anyone below the benchmark gets a targeted refresher before returning to high-risk tasks.

Action Ideas: Compare pre vs post results to quantify improvement. Set a role-based benchmark tied to job expectations. Assign targeted coaching or practice tasks to anyone below the threshold.

4. Ongoing Assessments: Reinforce Retention and Compliance

Use this when knowledge must stay current, performance is high stakes, or recertification is required. Ongoing checks reduce knowledge decay, surface retraining needs early, and help keep standards consistent across teams.

Example: For GDPR or HIPAA training, schedule quarterly knowledge checks. If one department repeatedly underperforms on the same topic, it’s likely a workflow or clarity issue, not just a training gap.

Action Ideas: Schedule periodic checks for critical topics. Trigger refreshers by score instead of retraining everyone. Track trends by team to identify systemic gaps that need process fixes.

Assessments become most valuable when results lead to a clear decision, whether that’s updating training content, assigning coaching, certifying readiness, or planning refreshers based on performance data rather than gut feel.

Key Metrics to Measure Training Effectiveness

If you’re running training, sooner or later someone will ask, “Did it work?” These metrics help you answer that with clarity, not opinions.

You don’t need a giant dashboard. You need a small set of numbers that show learning, readiness, and real impact.

1. Knowledge Gain

This compares what employees knew before training to what they know after. It’s your clearest signal that learning happened. The bigger insight comes from topic-level results, because that tells you what the training fixed and what it missed.

Practical Move: Compare pre- and post-training scores and note the weakest topics for the cohort.
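To make the pre/post comparison concrete, here is a minimal Python sketch. It assumes you can export per-learner scores (0 to 100) by topic for both tests. The learner names, topics, and the `topic_gains` and `weakest_topics` helpers are hypothetical and not tied to any specific tool.

```python
from statistics import mean

# Hypothetical exports: learner -> {topic: score out of 100}
pre = {
    "ana": {"refunds": 40, "de_escalation": 70, "tagging": 60},
    "ben": {"refunds": 50, "de_escalation": 60, "tagging": 80},
}
post = {
    "ana": {"refunds": 80, "de_escalation": 75, "tagging": 70},
    "ben": {"refunds": 90, "de_escalation": 65, "tagging": 85},
}

def topic_gains(pre, post):
    """Average post-minus-pre gain per topic across the cohort."""
    topics = next(iter(pre.values())).keys()
    return {t: mean(post[p][t] - pre[p][t] for p in pre) for t in topics}

def weakest_topics(post, n=2):
    """The n topics with the lowest average post-training score."""
    topics = next(iter(post.values())).keys()
    avg = {t: mean(scores[t] for scores in post.values()) for t in topics}
    return sorted(avg, key=avg.get)[:n]

print(topic_gains(pre, post))
print(weakest_topics(post))  # ['de_escalation', 'tagging']
```

In this made-up data, refunds shows the biggest gain while de-escalation barely moved and stays the weakest topic, so that is where the next revision should focus. The sketch assumes every learner took both tests.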

2. Pass Rate & Benchmark Readiness

Pass rate matters only if the passing score reflects the job. Use it to decide who is ready to work independently and who needs support before mistakes show up in real work.

Practical Move: Set a role-based benchmark and make certain high-risk topics “must-pass.”

3. Completion Rate

Completion does not prove skill, but it does show whether the training is doable. Low completion usually points to friction, like timing, access, poor manager support, or content that feels irrelevant.

Practical Move: Track completion by team and look for the module where most learners drop off.
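A small sketch of completion by team plus the most common drop-off module, assuming your platform can export how many modules each learner finished, in order. The module names, teams, and records are hypothetical.

```python
from collections import Counter, defaultdict

MODULES = ["intro", "policy", "tools", "scenarios", "final_check"]

# Hypothetical export: (learner, team, number of modules finished, in order)
progress = [
    ("ana", "support", 5),
    ("ben", "support", 2),
    ("cai", "support", 5),
    ("dee", "sales", 2),
    ("eli", "sales", 3),
    ("fay", "sales", 5),
]

def completion_by_team(records, total=len(MODULES)):
    """Share of enrolled learners in each team who finished every module."""
    enrolled, finished_all = defaultdict(int), defaultdict(int)
    for _, team, finished in records:
        enrolled[team] += 1
        finished_all[team] += int(finished == total)
    return {team: finished_all[team] / enrolled[team] for team in enrolled}

def drop_off_module(records, modules=MODULES):
    """Most common first-unfinished module among learners who did not complete."""
    stops = Counter(modules[finished] for _, _, finished in records
                    if finished < len(modules))
    return stops.most_common(1)[0][0] if stops else None

print(completion_by_team(progress))
print(drop_off_module(progress))  # tools
```

Here sales completes at a lower rate and most stalls happen at the tools module, which points to a friction check there (access, timing, relevance) before anyone rewrites the whole course.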

4. Knowledge Retention

A follow-up check shows what employees still remember weeks later. This matters most in safety, compliance, and process training where forgetting creates risk.

Practical Move: Run a short check 30 to 60 days after training and refresh only the weak topics.
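The "refresh only the weak topics" rule can be sketched in a few lines. This assumes you have cohort averages per topic from the post-training test and from the follow-up check; the topics and the benchmark of 75 are hypothetical.

```python
# Hypothetical cohort averages (0-100) per topic
post_avg = {"ppe_selection": 88, "incident_response": 84, "reporting": 90}
follow_up_avg = {"ppe_selection": 85, "incident_response": 68, "reporting": 82}

BENCHMARK = 75  # assumed passing level for the follow-up check

def retention(post_avg, follow_up_avg):
    """Share of each topic's post-training score still present at follow-up."""
    return {t: follow_up_avg[t] / post_avg[t] for t in post_avg}

def topics_to_refresh(follow_up_avg, benchmark=BENCHMARK):
    """Only the topics that fell below the benchmark get a refresher."""
    return sorted(t for t, score in follow_up_avg.items() if score < benchmark)

print(retention(post_avg, follow_up_avg))
print(topics_to_refresh(follow_up_avg))  # ['incident_response']
```

The retention ratio shows how much each topic decayed; the benchmark decides which topics actually trigger a refresher, so a topic can slip a little without retraining anyone.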

5. Time to Competence

This measures how quickly employees reach expected performance after training. It’s especially useful for onboarding and role changes, because it connects training to productivity.

Practical Move: Pick one measurable milestone that signals competence (your North Star metric) and track how long each cohort takes to reach it.
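Here is one way to compute time to competence, assuming the milestone is a single on-the-job metric with a threshold (0.95 here, e.g. a QA score) and that you record dated observations after training. The cohorts, dates, and threshold are hypothetical.

```python
from datetime import date
from statistics import median

THRESHOLD = 0.95  # hypothetical milestone, e.g. a QA score of 95%

# Hypothetical data: learner -> (cohort, training end date, [(date, metric value), ...])
records = {
    "ana": ("jan", date(2024, 1, 31),
            [(date(2024, 2, 14), 0.90), (date(2024, 2, 28), 0.96)]),
    "ben": ("jan", date(2024, 1, 31), [(date(2024, 2, 14), 0.97)]),
    "cai": ("feb", date(2024, 2, 29),
            [(date(2024, 3, 14), 0.80), (date(2024, 3, 28), 0.85)]),
}

def days_to_competence(training_end, observations, threshold=THRESHOLD):
    """Days from training end to the first observation at or above threshold."""
    for day, value in sorted(observations):
        if value >= threshold:
            return (day - training_end).days
    return None  # milestone not reached yet

def cohort_median_days(records):
    """Median days to competence per cohort, counting only learners who got there."""
    by_cohort = {}
    for cohort, training_end, observations in records.values():
        days = days_to_competence(training_end, observations)
        if days is not None:
            by_cohort.setdefault(cohort, []).append(days)
    return {cohort: median(days) for cohort, days in by_cohort.items()}

print(cohort_median_days(records))  # {'jan': 21.0}
```

Learners who have not reached the milestone yet (cai here) drop out of the median, so report that count alongside it; otherwise a slow cohort can look fast.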

6. Behavior & Performance Change

This ties training to the job. Pair assessment results with one or two operational metrics that should improve if learning is applied, like fewer errors, fewer escalations, faster resolution, higher quality scores, or better sales conversions.

Practical Move: Choose one metric to track before and after training and link changes to the topics learners struggled with.

If you want this to stay simple, start with a scorecard: pick two to three metrics for each program, set a benchmark, and decide what happens when results fall short. That turns training assessment into action, not reporting.
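A scorecard like this can be as small as a list of signals, each with a benchmark and an action agreed before results arrive. The sketch below is a hypothetical structure, not a prescribed format; the metric names, values, and actions are made up.

```python
from dataclasses import dataclass

@dataclass
class Signal:
    name: str
    value: float
    benchmark: float
    higher_is_better: bool
    action_if_short: str  # decided before the results come in

    def met(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.benchmark
        return self.value <= self.benchmark

def review(scorecard):
    """Return the pre-agreed action for every signal that missed its benchmark."""
    return [s.action_if_short for s in scorecard if not s.met()]

# Hypothetical scorecard for a support onboarding program
scorecard = [
    Signal("post-test average", 82, 80, True, "revise weakest module"),
    Signal("30-day retention", 71, 75, True, "refresh weak topics"),
    Signal("error rate (%)", 4.0, 3.0, False, "coach on error-prone steps"),
]

print(review(scorecard))
```

The `higher_is_better` flag matters because some signals (errors, escalations) improve when they go down.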

Training Assessment Methods That Work

The best method for employee training assessment depends on what you are trying to improve.

If the goal is recall, a knowledge check works. If the goal is judgment, you need scenarios. If the goal is execution, you need a work sample.

Use the list below to choose methods that produce results you can act on, not just scores you can file away.

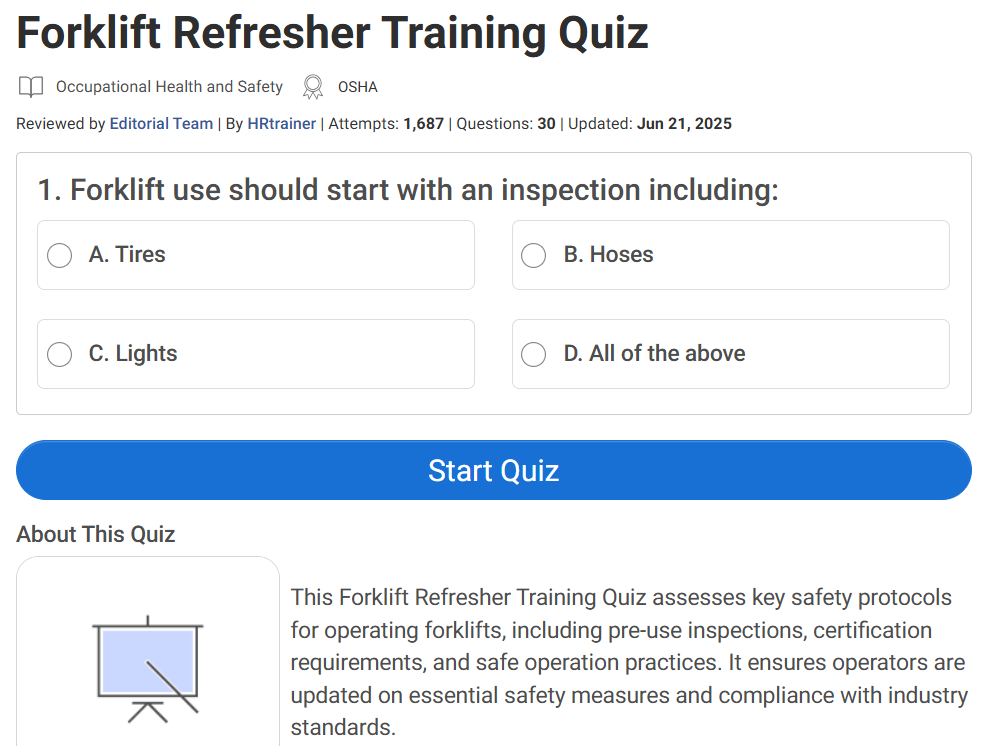

1. Knowledge Checks

Use these when employees must remember specific information, such as policies, product details, procedures, or compliance rules. They are most effective as a baseline before training and as a confirmation after training. Keep them grounded in the job so the score reflects readiness, not test-taking skills.

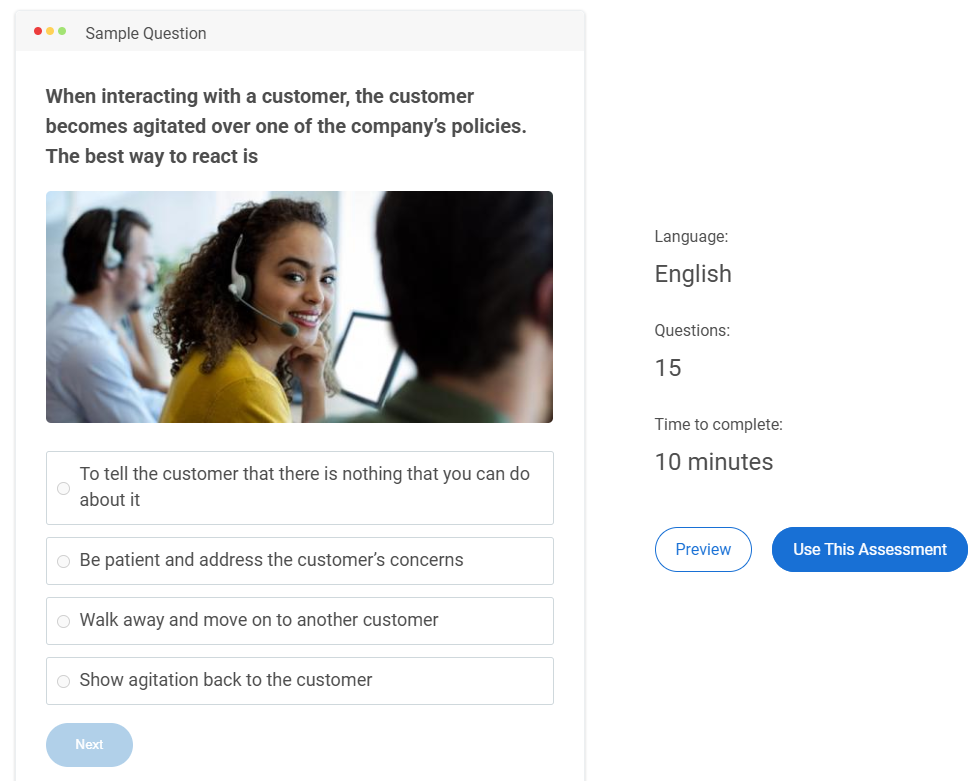

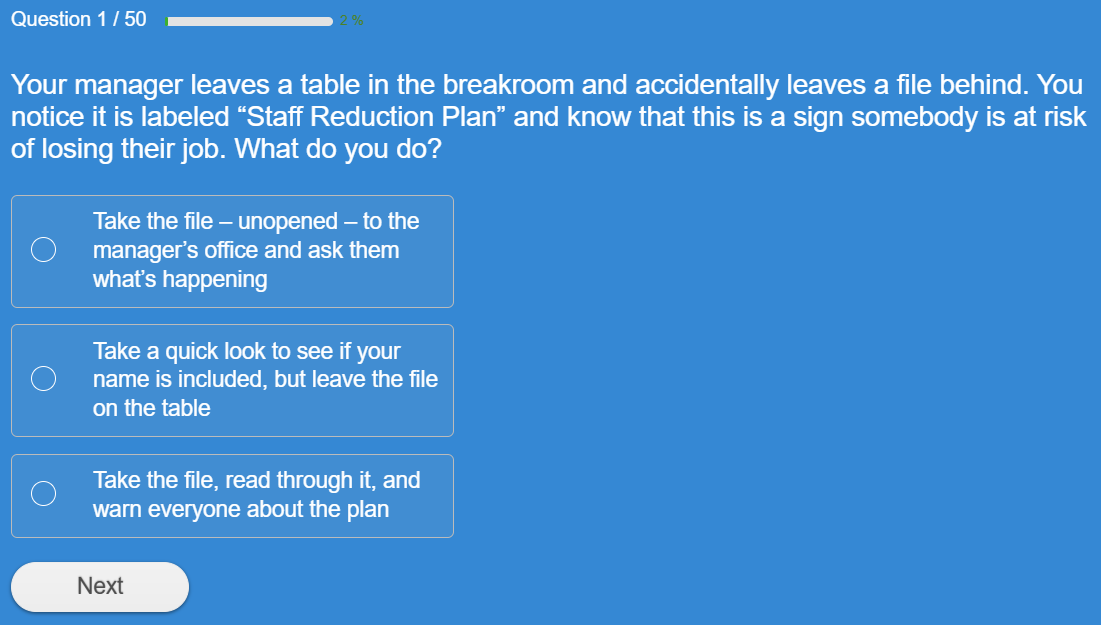

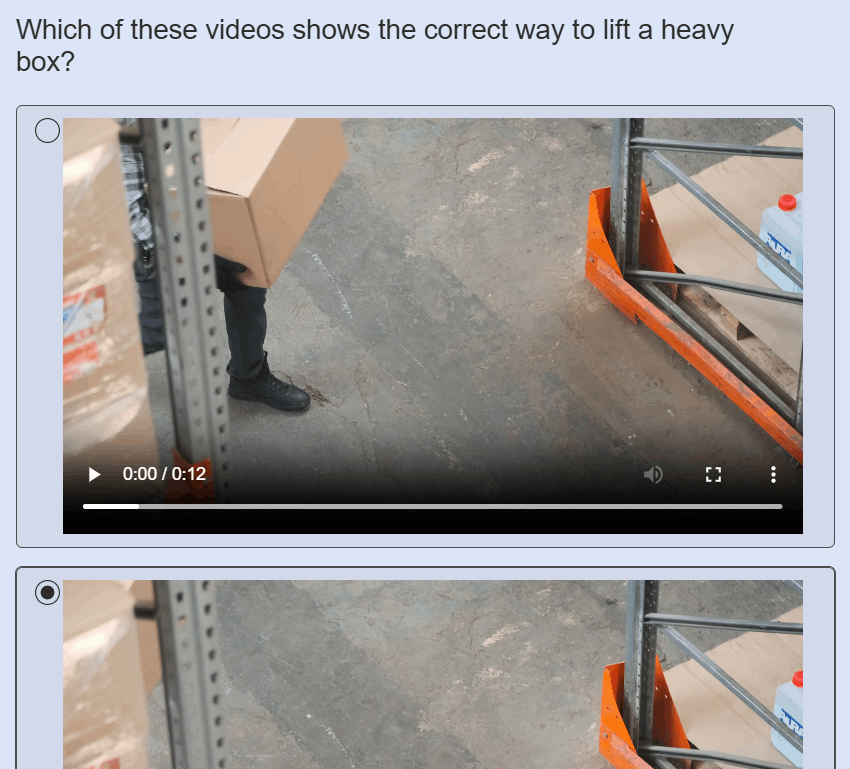

2. Scenario-Based Assessments

Use scenarios when the job requires decision-making, prioritization, and the ability to choose the right response under pressure. This method is ideal for customer support, sales conversations, safety choices, and compliance calls where “knowing” is not enough.

Strong scenarios mirror real constraints, like limited context, competing priorities, or time pressure.

3. Skills Demonstrations & Work Samples

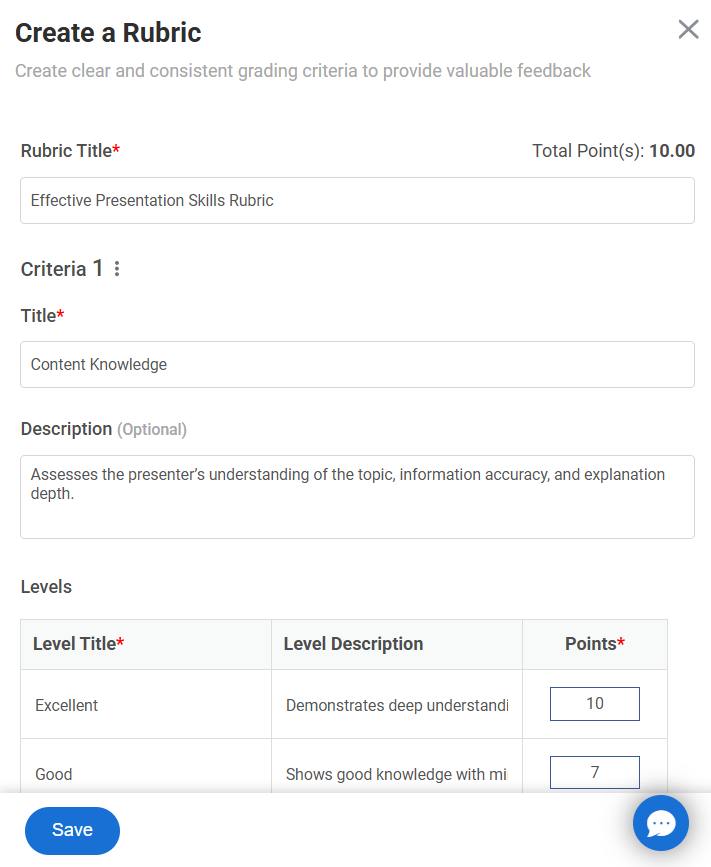

When the training is about doing, not knowing, work samples are hard to beat. Employees complete a realistic task and you score the output. This works well for tool training, documentation, writing, process execution, and quality standards. A short rubric keeps scoring consistent across teams.

4. Live Practice & Role Plays

This method is useful when communication and interpersonal skills are central to the role. It surfaces how employees respond in the moment, not how they answer on a quiz. It is also a strong option for coaching-focused programs, because feedback can be immediate and specific.

5. On-the-Job Observation & Manager Check-Ins

Some outcomes only appear in real work. Observations help confirm whether training changed behavior and whether the new behavior is consistent over time. A short checklist is usually enough. The best version is quick to complete and focuses on a few high-impact behaviors.

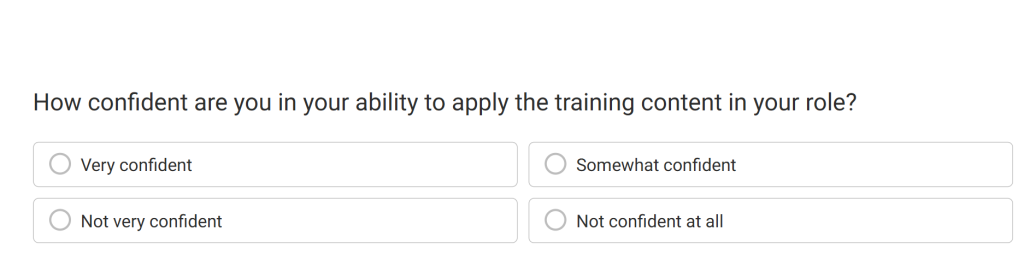

6. Self-Assessments and Confidence Ratings

Self-assessments do not prove competence, but they reveal how employees perceive their readiness. They are most useful when paired with objective results. A mismatch is informative: high confidence with low scores signals risk, while low confidence with high scores signals a coaching opportunity.
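The mismatch logic is easy to apply consistently once the cutoffs are fixed. This sketch assumes confidence is self-rated on a 1 to 5 scale and scores are percentages; both cutoffs are illustrative assumptions, not recommended values.

```python
def readiness_signal(confidence, score, confidence_cutoff=4, score_cutoff=80):
    """Classify a learner by self-rated confidence (1-5) vs. assessed score (%).

    The cutoffs are illustrative assumptions; set them per program.
    """
    confident = confidence >= confidence_cutoff
    competent = score >= score_cutoff
    if confident and not competent:
        return "risk: overconfident"
    if competent and not confident:
        return "coaching: underconfident"
    if confident and competent:
        return "aligned: ready"
    return "aligned: needs training"

print(readiness_signal(5, 60))  # risk: overconfident
print(readiness_signal(2, 90))  # coaching: underconfident
```

The two mismatch cases are the ones worth acting on first; the aligned cases simply confirm what the score already says.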

7. Feedback Surveys

Training feedback surveys improve the training experience by showing where content was unclear, irrelevant, or too fast. They should support evaluation, not replace it. Use surveys to fix the program and assessments to prove outcomes.

If you want a simple way to choose, start with this: use knowledge checks for recall, scenarios for judgment, and work samples for execution. Add observation when behavior change is the real goal.

How to Conduct an Effective Training Assessment

A training assessment is only useful if it helps you make a decision. Who is ready to work independently? What needs reinforcement? Which part of the training is not landing? If the result won’t change anything, skip the assessment and fix the training plan first.

Step 1: Start With the Problem You’re Trying to Solve

Most teams start with the course outline. That’s backwards. Start with what is going wrong in real work.

Common starting points:

- Too many errors or rework

- Slow ramp-up for new hires

- High escalations or repeat customer issues

- Safety incidents or audit failures

Pick one primary outcome for this program. One is enough. It keeps the assessment tight and the results easy to act on.

Step 2: Define What “Ready” Means Before You Measure It

Decide your standards upfront so you don’t end up debating them after the scores show up. Readiness usually needs two layers: an overall benchmark and a few non-negotiables.

A practical way to set it up:

- Overall passing score that signals basic readiness

- Must-pass topics for critical steps (safety, compliance, escalation triggers)

- A clear next step for anyone who misses the mark (coaching, refresher, retake)

Also decide what “almost there” looks like. A small band just below the benchmark can trigger targeted coaching instead of a full retake, which saves time without lowering standards.
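Writing the standards down as rules before anyone takes the assessment is what keeps the debate out of the results. Here is a minimal sketch; the benchmark of 80, the 5-point "almost there" band, the must-pass topics, and the mapping to refresher, coaching, or retake are all hypothetical choices.

```python
# Hypothetical readiness standards, agreed before anyone takes the assessment
BENCHMARK = 80                                         # overall passing score (%)
NEAR_MISS_BAND = 5                                     # "almost there": up to 5 points below
MUST_PASS = {"lockout_tagout", "escalation_triggers"}  # critical topics

def next_step(overall, topic_passed):
    """Map one learner's result to a pre-agreed next step.

    topic_passed maps topic -> True/False; a missing must-pass topic counts as failed.
    """
    failed_critical = sorted(t for t in MUST_PASS if not topic_passed.get(t, False))
    if failed_critical:
        return "refresher on " + ", ".join(failed_critical)
    if overall >= BENCHMARK:
        return "ready"
    if overall >= BENCHMARK - NEAR_MISS_BAND:
        return "targeted coaching"
    return "retake"

print(next_step(77, {"lockout_tagout": True, "escalation_triggers": True}))
```

Must-pass topics are checked first on purpose: a high overall score should never mask a miss on a critical step.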

Step 3: Pick the Assessment Method That Matches the Skill

A quiz works for rules and facts. It does not prove someone can handle a tough situation or perform a task correctly. Match the method to what the job demands.

- Use short quizzes when accuracy depends on knowing the right steps or policies

- Use scenarios when employees need judgment and the “right answer” depends on context

- Use work samples when training is about doing the task, not describing it

- Use quick manager check-ins when the goal is behavior change on the job

Step 4: Build the Assessment From Real Work, Not Training Slides

The most relevant questions come from real work patterns, not the training content itself. Look for where people slip and what those mistakes cost you.

Good sources:

- QA rubrics and quality reviews

- Audit findings and recurring compliance gaps

- Escalation reasons and common complaint themes

- Incident logs, near-miss notes, or safety observations

- Manager notes on repeat mistakes

Turn the top issues into questions and scenarios. That’s how the assessment predicts performance instead of just testing memory.

Step 5: Write Questions That Give You Clean Signals

A confusing question creates bad data. Bad data leads to the wrong decision. Keep it straightforward.

- Use the language employees actually use at work

- Avoid trick wording and vague “best answer” options

- Make wrong choices believable, not silly

- Use “what would you do next?” when application matters

Keep it lean. In most cases, 10 to 20 well-written questions or scenarios give a clearer signal than a long test that invites rushing and guessing.

If integrity matters, reduce easy workarounds. Use a question pool, shuffle questions, and avoid showing answers until completion for certification-style tests.
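A question pool with shuffling can be sketched with Python's standard `random` module. This assumes questions are tagged by topic; the pool, question IDs, and the `build_form` helper are hypothetical. Drawing a fixed number per topic keeps coverage comparable across learners while the specific questions and their order vary.

```python
import random

def build_form(pool, per_topic, seed):
    """Draw a per-learner form from a question pool and shuffle its order.

    pool maps topic -> list of question IDs; per_topic is how many to draw
    from each topic. A per-learner seed makes each form reproducible for review.
    """
    rng = random.Random(seed)
    form = []
    for questions in pool.values():
        form.extend(rng.sample(questions, per_topic))
    rng.shuffle(form)
    return form

# Hypothetical pool of question IDs grouped by topic
pool = {
    "refunds": ["R1", "R2", "R3", "R4"],
    "tagging": ["T1", "T2", "T3"],
}
form_a = build_form(pool, per_topic=2, seed="ana")
form_b = build_form(pool, per_topic=2, seed="ben")
print(form_a, form_b)
```

Seeding per learner is a design choice: it lets you regenerate exactly what someone saw if a result is challenged later.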

Step 6: Look for Patterns, Then Act Narrowly

Pass and fail is the headline. The useful part is what repeats.

Use results like this:

- If most people miss the same topic, fix that part of the training

- If only a few people struggle, coach those individuals

- If strong performers miss a question, rewrite the question or clarify the material

- If this is a high-impact skill, run a short follow-up check later to see what stuck
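The first two rules in the list above can be applied mechanically to topic-level results. This sketch assumes you have a pass/fail per learner per topic and treats "most people" as more than half of the cohort, which is an assumption to tune per program. Item-level problems (strong performers missing a question) need a different view and are not covered here.

```python
def triage(results, majority=0.5):
    """results: learner -> {topic: passed?}. Returns an action per topic that anyone missed.

    The 50% "most people" cutoff is an assumption; tune it per program.
    """
    topics = {topic for per_learner in results.values() for topic in per_learner}
    actions = {}
    for topic in sorted(topics):
        missed = sorted(learner for learner, per_learner in results.items()
                        if not per_learner.get(topic, False))
        if not missed:
            continue
        if len(missed) / len(results) > majority:
            actions[topic] = "fix training content"
        else:
            actions[topic] = "coach: " + ", ".join(missed)
    return actions

# Hypothetical topic results for a small cohort
results = {
    "ana": {"refunds": False, "tagging": True},
    "ben": {"refunds": False, "tagging": True},
    "cai": {"refunds": False, "tagging": False},
    "dee": {"refunds": True, "tagging": True},
}
print(triage(results))
```

Here refunds is missed by three of four learners, which points at the training, while tagging is missed by one person, which points at coaching.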

A quick way to make this real: imagine a support onboarding program. A baseline assessment shows weak policy knowledge, the training focuses there, the post-training assessment confirms improvement, and a 30-day check catches retention drops in edge-case scenarios. That triggers a short refresher module instead of retraining the entire cohort.

Why Online Training Assessments Are More Effective

Online assessments are more effective because they turn training evaluation into something you can run consistently, at speed, and with cleaner signals. The value is not the “online” part. It’s what that enables.

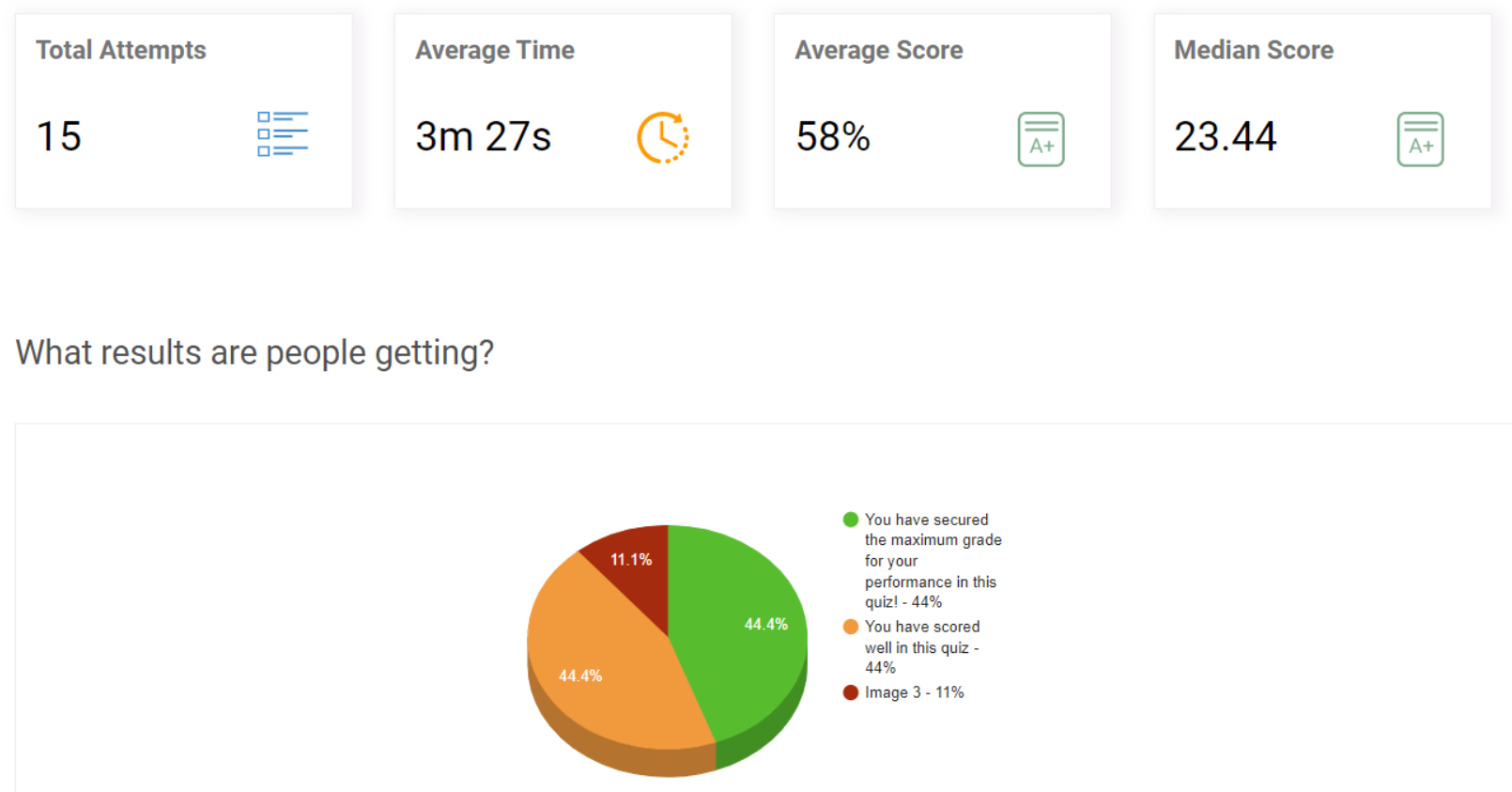

1. They Turn Assessments Into a Feedback Loop, Not a One-Time Event

When assessments are easy to launch and repeat, it becomes realistic to run a baseline, add checkpoints, and follow up later for retention. That’s how training improves over time instead of being rebuilt from scratch each cycle.

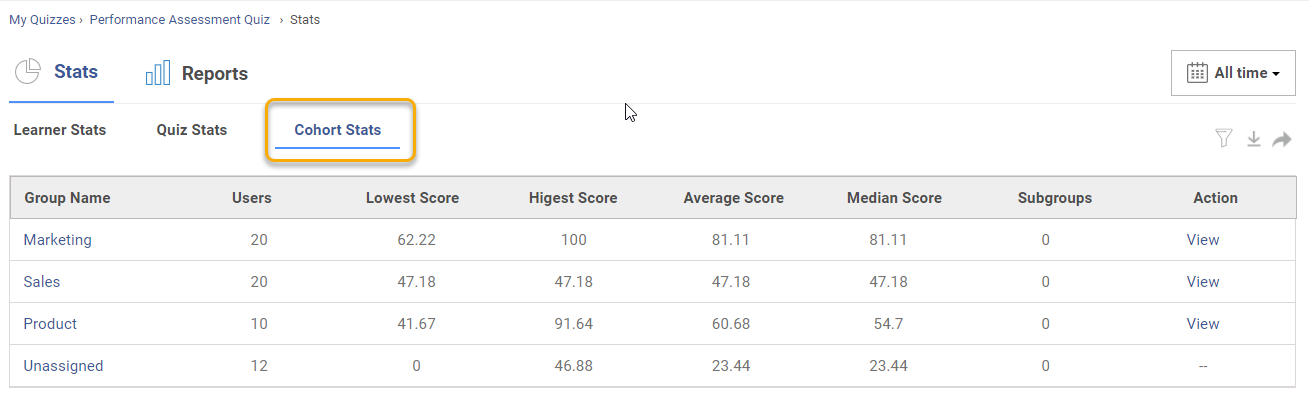

2. They Show You Patterns You Would Otherwise Miss

The real insight is not the average score. It’s what groups struggle with, what topics keep failing, and where learners drop off. Online assessment reporting makes those patterns visible by team, cohort, role, and topic so you can fix the right thing.

3. They Reduce “Assessment Friction,” Which Improves Data Quality

When an assessment is hard to access or time-consuming to grade, people rush, skip, or delay it. Online delivery removes a lot of that friction, which usually leads to more completions and more reliable results.

4. They Let You Scale Without Trading Off Consistency

With offline assessments, quality and scoring often vary from one trainer to another. Online assessments make it easier to keep benchmarks, scoring, and evaluation standards consistent across locations and managers.

5. They Support Stronger Integrity Measures When It Matters

If results drive certification or compliance, online tools can reduce easy shortcuts with controlled access, randomized questions, and settings that discourage answer sharing, which keeps the results more trustworthy.

Training Assessment Best Practices

Even a well-planned assessment can fall apart in execution. This section is about the small choices that quietly decide whether your results are reliable, fair, and useful.

1. Keep the Assessment Purpose Clear

If the goal is learning support, build in feedback and use the results to guide practice. If the goal is certification or readiness, keep conditions tighter and score against clear standards. Mixing both goals in one assessment often creates confusion and weak data.

2. Test What Matters Most, Not What’s Easy to Test

It’s tempting to assess only what fits neatly into a quiz. But jobs are messy. Where possible, prioritize questions that reflect real decisions, edge cases, and high-impact steps, even if that means fewer questions overall.

3. Avoid Common Question Design Traps

A few bad questions can distort the whole assessment. Watch for patterns that inflate scores or create accidental failures:

- Vague wording that can be read two ways

- “All of the above” style shortcuts

- Answer options with obvious length or tone tells

- Questions that reward memorizing terms instead of applying knowledge

4. Make Fairness Part of the Design

Assessments should not punish people for background knowledge unrelated to the job. Keep the reading level appropriate, avoid cultural references that not every employee will recognize, and use the same standard across cohorts unless role risk clearly differs.

5. Don’t Let Reporting Stop at Averages

Average scores hide the real story. The most useful views are usually:

- Topic-level performance to see what content is not landing

- Distribution of scores to spot who is struggling vs who is excelling

- Item-level performance to catch confusing questions early
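Item-level performance is usually read through two classic statistics: difficulty (the share who answered correctly) and discrimination (how much better the top scorers did on that question than the bottom scorers). The sketch below uses the upper/lower-group method with a 27% split, a common convention in classical item analysis; the responses are hypothetical and far too few for real decisions. A negative discrimination means stronger performers missed the question more often than weaker ones, which usually signals a confusing question or unclear material.

```python
def item_stats(responses, group_frac=0.27):
    """Difficulty and upper-lower discrimination for each question.

    responses: learner -> {item: 1 if answered correctly else 0}
    difficulty: share of all learners who answered correctly
    discrimination: share correct in the top group minus share correct in the
    bottom group (top/bottom group_frac of learners, ranked by total score)
    """
    ranked = sorted(responses, key=lambda learner: sum(responses[learner].values()),
                    reverse=True)
    k = max(1, round(len(ranked) * group_frac))
    upper, lower = ranked[:k], ranked[-k:]
    stats = {}
    for item in next(iter(responses.values())):
        difficulty = sum(responses[lr][item] for lr in ranked) / len(ranked)
        upper_share = sum(responses[lr][item] for lr in upper) / k
        lower_share = sum(responses[lr][item] for lr in lower) / k
        stats[item] = (difficulty, upper_share - lower_share)
    return stats

def flag_items(stats):
    """Questions that stronger learners miss more often than weaker ones."""
    return sorted(item for item, (_, disc) in stats.items() if disc < 0)

# Hypothetical responses for a tiny cohort (real cohorts should be much larger)
responses = {
    "ana": {"q1": 1, "q2": 1, "q3": 1, "q4": 0},
    "ben": {"q1": 1, "q2": 1, "q3": 0, "q4": 0},
    "cai": {"q1": 1, "q2": 0, "q3": 0, "q4": 1},
    "dee": {"q1": 0, "q2": 0, "q3": 0, "q4": 1},
}
stats = item_stats(responses)
print(flag_items(stats))  # ['q4']
```

With small cohorts the discrimination index is noisy, so treat a flag as a prompt to reread the question, not as proof it is broken.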

6. Treat Assessment Data as a Living Signal

If your assessment stays unchanged for a year, it slowly becomes less aligned with the work. Update questions when policies change, tools evolve, or real-world failure patterns shift. Small, frequent updates beat big rewrites.

7. Protect Credibility When Stakes Are High

If results affect certification, promotions, or compliance sign-off, the assessment needs stronger controls. Keep access controlled, reduce easy answer sharing, and document benchmarks clearly so results hold up in reviews.

Prove Training Works, Then Scale What Delivers

Training pays off only when it changes what people do on the job. The simplest way to get there is to treat assessment as a repeatable cycle, not a one-time test. Set a baseline before training starts. Add quick checks during delivery. Confirm readiness after. Then run a short follow-up later for the skills that matter most.

If you take one action today, pick one training program and define two success signals, like fewer errors and faster time to competence. Run a pre-test and post-test, review topic-level gaps, and make one improvement to the training based on what learners missed.

When you want to roll this out across teams, ProProfs Quiz Maker can help you move faster. You can use AI to generate questions, build quizzes from existing content, apply integrity settings, and review reports that make next steps obvious.

Frequently Asked Questions

How do you assess training effectiveness?

Start by comparing pre-training and post-training scores to measure knowledge gain. Then connect the results to one on-the-job metric that should improve if training is applied, such as fewer errors, higher quality scores, faster time to competence, or fewer escalations.

What are the four types of training evaluation?

The four types are commonly explained through the Kirkpatrick model: reaction (how learners felt about the training), learning (what they learned), behavior (what changed on the job), and results (the business impact). A strong assessment strategy focuses most on learning, behavior, and results, not just reaction.

What are the five steps of training needs assessment?

A practical five-step approach is: identify the business goal, define the skills required to reach it, assess current skill levels, prioritize gaps based on impact and risk, and choose the right training plan to close those gaps. This keeps training focused on outcomes instead of assumptions.

What are the three types of training needs assessment?

The three types are organizational (what the business needs), task or job (what the role requires), and individual (what the employee is missing). Using all three helps you avoid generic training and target the right people and skills.
